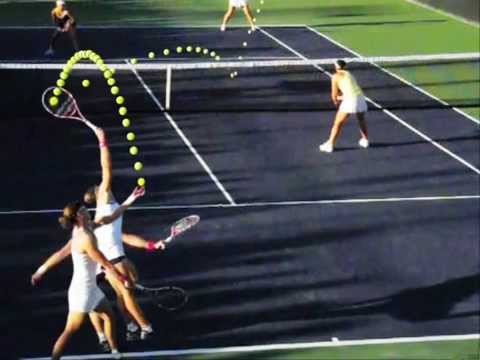

I have found that pictures such as the one above are very informative for showing athletic motions, especially the movement of arms, balls, rackets, etc. However, these pictures are produced by manually selecting portions of high-speed video frames and assembling the composite with Photoshop layers. I don't make them myself, so I can't say how long it takes to produce a composite picture from a series of high-speed video frames.

These composite pictures of selected video frames have lately been posted in the Tennis Talk Forum by Anatoly Antipin ("Toly").

In a Tennis Talk forum reply, he says that he uses Kinovea to select single frames and save them as .jpeg files. He then works in Photoshop, using its multiple-layers technique, and in PowerPoint. Beyond that, I can't provide much more information on the process.

These pictures are one of the best ways I've seen of showing athletic motions, and I'm noticing things I never noticed before. In particular, the rare Fuzzy Yellow Balls videos of the server taken from above show some very interesting details. Also, where the ball is shown along its trajectory, the spacing between ball images together with the camera frame rate can provide timing. See the ball's trajectory and ball spacing in the tennis serve.
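As a rough worked example of getting timing from ball spacing: consecutive ball images are 1/fps seconds apart, so speed ≈ spacing × fps / (number of frame intervals between the two images). To convert pixels to meters, the sketch below uses the ball's own diameter in the picture as the scale. It assumes the ball moves roughly parallel to the image plane (no perspective correction) and takes about 0.067 m as a standard ball diameter, which is an approximation. The function name and the example numbers are hypothetical.

```python
# Hypothetical helper: estimate ball speed from a multi-frame composite.
# Assumes the ball moves roughly parallel to the image plane (no perspective
# correction) and uses the ball's apparent diameter in pixels as the scale.

BALL_DIAMETER_M = 0.067  # approximate tennis ball diameter in meters (assumption)


def ball_speed(spacing_px, ball_diameter_px, fps, frames_between=1,
               ball_diameter_m=BALL_DIAMETER_M):
    """Return the estimated ball speed in m/s.

    spacing_px       -- distance between two ball images, in pixels
    ball_diameter_px -- apparent ball diameter in the same picture, in pixels
    fps              -- camera frame rate (frames per second)
    frames_between   -- number of frame intervals separating the two images
    """
    meters_per_px = ball_diameter_m / ball_diameter_px
    distance_m = spacing_px * meters_per_px
    elapsed_s = frames_between / fps  # each frame interval lasts 1/fps seconds
    return distance_m / elapsed_s


if __name__ == "__main__":
    # Illustrative numbers only: images 100 px apart, ball 20 px wide, 240 fps.
    v = ball_speed(spacing_px=100, ball_diameter_px=20, fps=240)
    print(f"{v:.1f} m/s ({v * 3.6:.0f} km/h)")
```

If some frames were skipped when the composite was assembled, `frames_between` has to count the skipped intervals too, otherwise the speed comes out too high.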

If anyone has samples of similar display methods, please post them along with some of your techniques.

YouTube video from Anatoly Antipin showing composites of selected video frames as part of the video.

http://www.youtube.com/watch?v=QUwxiqFUi58

Videos

http://www.youtube.com/channel/UCVtnV90bBCB50nkd8EQDFOQ

Some other composite pictures of video frames.

Stosur toss and impact location.

Rare serves filmed from above, showing racket and hand movement. See the FYB YouTube videos, such as http://www.youtube.com/watch?v=2FpeYGG9XAg, and others filmed from above.

Serve showing the racket going from edge-on to the ball to impact, which lasts about 0.02 second, plus some of the follow-through. This is the internal shoulder rotation, which contributes the most to racket head speed at impact.
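To see why a high frame rate matters for a phase this short, here is a quick check of how many frame intervals an event of about 0.02 second spans at some common frame rates. The frame rates are example values, not necessarily those of the videos used for these pictures, and the function names are made up for illustration.

```python
# How many frame intervals does a short event span at a given frame rate?
# The 0.02 s duration comes from the serve description; the frame rates
# below are just common example values.


def frames_spanned(duration_s, fps):
    """Number of frame intervals covering duration_s at fps (may be fractional)."""
    return duration_s * fps


def elapsed_time(n_intervals, fps):
    """Time in seconds covered by n_intervals frame intervals at fps."""
    return n_intervals / fps


if __name__ == "__main__":
    for fps in (30, 60, 120, 240, 480):
        print(f"{fps:>4} fps: {frames_spanned(0.02, fps):.1f} frame intervals")
```

At 30 fps the whole edge-on-to-impact phase can fall between two frames, while at 240 fps it spans roughly five frame intervals. Going the other way, counting the frame intervals between the edge-on image and the impact image in a composite and dividing by the frame rate gives the duration.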

Composite picture of a Roger Federer forehand.

I discussed the issue with someone who mentioned a video processing technique that saves, for example, the brightest value occurring at each pixel across a series of video frames. Suppose a tennis ball travels across a tennis court that serves as a dark background. In each frame the ball produces the brightest pixels, at a different location each time, so keeping the brightest value at every pixel preserves all of the ball images for display in a later composite video or still. In a composite picture, the ball's trajectory is shown and the distance between the ball images gives an indication of time or velocity. Tracking does not provide that. In theory, this could produce results similar to the composite pictures above, but the result would be produced by the computer rather than manually.
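A minimal sketch of the brightest-pixel idea in Python with NumPy, assuming the frames are already loaded as equally sized RGB arrays from a fixed (non-panning) camera; loading the video, for example with OpenCV or imageio, is not shown. Brightness is measured here with the Rec. 601 luma weights, which is an assumption; any brightness measure would do. The function name is hypothetical.

```python
import numpy as np


def brightest_pixel_composite(frames):
    """Keep, at every pixel, the color from the frame that is brightest there.

    frames: sequence of H x W x 3 uint8 RGB arrays from a fixed camera.
    Returns an H x W x 3 uint8 composite.
    """
    stack = np.stack(frames)                          # (N, H, W, 3)
    # Per-pixel brightness of each frame using Rec. 601 luma weights.
    luma = stack @ np.array([0.299, 0.587, 0.114])    # (N, H, W), float64
    brightest = luma.argmax(axis=0)                   # (H, W) frame index
    rows, cols = np.indices(brightest.shape)          # pixel coordinates
    # Gather each pixel's RGB value from its brightest frame.
    return stack[brightest, rows, cols]
```

Choosing whole pixels by luma, rather than taking the maximum of each color channel separately, keeps every output pixel's color from a single frame. For grayscale frames the composite reduces to `np.stack(frames).max(axis=0)`. Wherever the ball passes over something brighter than itself, such as a white line, that ball image is lost, so the method works best when the moving object is the brightest thing along its path.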

The technique might also work by saving the darkest pixel values from a series of frames. Dark objects on light backgrounds would be the best candidates.
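For the darkest-pixel variant with grayscale frames, the per-pixel minimum over all frames is enough. A minimal sketch, assuming equally sized uint8 grayscale frames from a fixed camera; the function name and the synthetic example are hypothetical.

```python
import numpy as np


def darkest_pixel_composite(frames):
    """Per-pixel minimum over a sequence of H x W uint8 grayscale frames.

    A dark object moving over a light background leaves a dark image at every
    position it occupied; the light background is kept wherever nothing dark
    ever passed.
    """
    return np.stack(frames).min(axis=0)


if __name__ == "__main__":
    # Synthetic example: light background (value 220) with a dark 2-pixel
    # "racket" (value 10) shifted 3 pixels to the right in each frame.
    frames = []
    for x in (0, 3, 6):
        f = np.full((3, 10), 220, dtype=np.uint8)
        f[1, x:x + 2] = 10
        frames.append(f)
    print(darkest_pixel_composite(frames)[1])
```

For color frames, it is safer to pick the darkest frame at each pixel by a single brightness measure than to take the minimum of each color channel separately, which can mix colors from different frames.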

This technique has been applied to some soccer videos, where the ball's trajectory appears.

https://www.youtube.com/watch?v=l7l5YKssHPg

http://fcl.uncc.edu/nhnguye1/balltracking.html

Also, since most objects in athletic motions move in one direction, saving or processing only the forward edge of each object might be informative and would allow more frames to be displayed without the images piling up on one another. This has been done manually in the composite pictures above by layering in Photoshop. Transparency and color coding might also be useful capabilities.
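One hypothetical way to automate the forward-edge idea: separate the moving object from the background by thresholding each frame's difference from a background image, then keep only the pixels the object newly covers in each frame (its current mask minus the previous frame's mask). For an object moving in one direction, that newly covered region lies along its forward edge. This is a sketch under several assumptions: a fixed camera, grayscale uint8 frames, a background image without the object (a per-pixel median over many frames is one common estimate), and an arbitrary threshold of 40 gray levels. The function names are made up for illustration.

```python
import numpy as np


def object_mask(frame, background, threshold=40):
    """True where the frame differs from the background by more than threshold."""
    # Cast to a signed type so the subtraction cannot wrap around in uint8.
    diff = frame.astype(np.int16) - background.astype(np.int16)
    return np.abs(diff) > threshold


def forward_edge_composite(frames, background, threshold=40):
    """Paste only the newly covered (leading) pixels of each frame onto the background.

    frames: sequence of H x W uint8 grayscale frames from a fixed camera.
    background: H x W uint8 image of the scene without the moving object.
    """
    out = background.copy()
    prev = object_mask(frames[0], background, threshold)
    for frame in frames[1:]:
        cur = object_mask(frame, background, threshold)
        leading = cur & ~prev          # covered now, but not in the previous frame
        out[leading] = frame[leading]
        prev = cur
    return out
```

As written, the first frame contributes nothing; pasting its full object mask as well would show where the motion starts. Tinting each frame's leading pixels with a different color would add the color coding mentioned above.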

A video frame or still that shows just three frames (the current frame along with the object positions from the frames before and after, perhaps color coded or transparent) can often be very informative. If the objects from the frames before and after were always displayed as well, important observations would be more apparent. With normal stop-action single-frame viewing, you have to remember the positions instead of seeing them.
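A minimal sketch of such a three-frame display, assuming grayscale uint8 frames from a fixed camera and a background image without the moving objects. The current frame is shown as-is; pixels where an object was in the previous frame (but is not now) are blended toward red, and pixels where it will be in the next frame are blended toward blue. The red/blue coding, the 50% transparency, the threshold, and the function name are all illustrative choices, not an established tool.

```python
import numpy as np

RED = np.array([255.0, 0.0, 0.0])
BLUE = np.array([0.0, 0.0, 255.0])


def three_frame_view(prev, cur, nxt, background, threshold=40, alpha=0.5):
    """Return an H x W x 3 uint8 RGB image of cur with ghosts of prev and nxt.

    prev, cur, nxt, background: H x W uint8 grayscale images (fixed camera).
    alpha: opacity of the colored ghosts (0 = invisible, 1 = solid color).
    """
    bg = background.astype(np.int16)

    def mask(frame):
        # Object pixels: those differing noticeably from the background.
        return np.abs(frame.astype(np.int16) - bg) > threshold

    cur_mask = mask(cur)
    # Start from the current frame, converted to RGB floats for blending.
    out = np.repeat(cur[:, :, None], 3, axis=2).astype(np.float64)
    for frame, color in ((prev, RED), (nxt, BLUE)):
        ghost = mask(frame) & ~cur_mask   # object was/will be here, but is not now
        out[ghost] = (1 - alpha) * out[ghost] + alpha * color
    return np.rint(out).astype(np.uint8)
```

Because the ghosts are built from background-subtracted masks, the object in the current frame stays untinted even where it overlaps its previous or next position. Running this for every frame of a clip would give the "always show the frame before and after" playback described above.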

If anyone has related links or experience with this topic, please post.

The main idea would be to produce informative composite videos or stills like those above, but without the time that manual processing requires.