Hi,

It is a very interesting piece of technology, and every experiment is welcome !

Personally, I feel I don't have the time to fully dive into this, considering the amount of tasks already on the todo list. But if anyone is interested, please experiment ! Report your findings, brainstorm about how it could be used, etc.

1,426 2011-02-04 00:04:41

Re: Using Kinovea with Microsoft's Kinect camera: Is it possible? (6 replies, posted in General)

1,427 2011-02-03 17:17:35

Re: Using overlay with zoom? (9 replies, posted in General)

What would still be needed: the possibility to make a sequence of overlay photos. Now the sequence moves only in the other video...

Hum… Trying the various export buttons on the individual videos indeed reveals several inconsistencies.

- export image : both images blended.

- export sequence : sequence of the primary video, blended with a fixed frame of the other video.

- export video : just the primary video, the other video is not blended at all.

- special exports (key images, time freeze) : same as export video.

Now there is some logic to the fact that the buttons on the individual video screens are only exporting this specific screen's video and images.

It keeps the function of the button similar to when there is only one screen.

Another glitch : when blending is enabled and you use the "dual export" (on common controls bar), it exports the side by side video, including the blending, which is redundant.

What about:

- If the blending is enabled, then the "dual export" video will actually export a single, merged video.

The logic would be that :

- Export buttons on the individual screens work on the individual videos. (same function as if there was only a single screen, no blend)

- Export buttons on the common controls bar :

--- If no blending, they work by creating side by side images or videos.

--- If blending, they work by creating blended outputs.

Then, a sequence button could be added to the common controls bar to implement sequence export. (side by side or blended depending on the current option)

?
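To make the proposal easier to discuss, here is a minimal sketch of the rule in Python. It is not Kinovea code: the names `Source` and `export_mode` and the returned labels are made up for the illustration.

```python
from enum import Enum


class Source(Enum):
    """Where the export button lives (hypothetical names)."""
    SCREEN = "individual screen"
    COMMON = "common controls bar"


def export_mode(source: Source, blending: bool) -> str:
    """Return the kind of output the proposed rule would produce."""
    if source is Source.SCREEN:
        # Buttons on a screen only ever export that screen's own video,
        # exactly as if it were the only screen (no blend).
        return "single"
    # Buttons on the common controls bar follow the blending option.
    return "blended" if blending else "side_by_side"
```

A sequence button on the common controls bar would go through the same rule, so it would come out side by side or blended depending on the current option.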

1,428 2011-02-02 10:42:07

Re: Error when trying to save in dual screen mode with version 0.8.11 (14 replies, posted in Bug reports)

Thanks for the test!

I have reopened bug 227 - Could you attach the logs there?

Thank you.

1,429 2011-02-02 10:29:04

Re: A suggestion on the origo (4 replies, posted in Ideas and feature requests)

Would it be ok to have an option on trajectories to "Set the origin of coordinates to follow this track" ? (or similar wording)

(I don't know how hard it will be to implement, but that would avoid creating a new object / tool.)

I know this kind of trick is also used in pure mechanics, to express the trajectory of a point relative to a moving coordinate system. (for example, the edge of a moving wheel relative to its center).

(pyMecaVideo, for Linux, can do that)

In sports, this could be useful to express, for example, the hand motion relative to the elbow, the elbow relative to the shoulder, foot to knee, etc.
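As a rough illustration of the idea (a sketch only, not how it would be implemented in Kinovea), expressing one track relative to another is a per-frame subtraction. The function name `relative_track` and the sample coordinates are invented for the example, and both tracks are assumed to have one (x, y) position per frame.

```python
def relative_track(point_track, origin_track):
    """Express point_track in a coordinate system whose origin follows origin_track.

    Both arguments are lists of (x, y) positions, one per frame.
    """
    if len(point_track) != len(origin_track):
        raise ValueError("both tracks must cover the same frames")
    return [
        (px - ox, py - oy)
        for (px, py), (ox, oy) in zip(point_track, origin_track)
    ]


# Hypothetical pixel positions of the hand and the elbow over three frames.
hand = [(10, 5), (12, 6), (15, 8)]
elbow = [(8, 5), (9, 5), (10, 5)]
hand_relative_to_elbow = relative_track(hand, elbow)
```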

Regarding stabilization of hand held videos, I had started working on it from another approach. For the more advanced visual effects, the motion of the camera has to be computed from the way the background changes over time. This can be used to align images over each other by reversing the computed motion.

It only works when the camera is looking straight ahead though, but it could be helpful.
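The "reversing the computed motion" part can be sketched as follows. This assumes the hard part (estimating how far the background moved between two consecutive frames) is already done, and that the motion is a pure translation in pixels. The function name `stabilizing_offsets` is hypothetical.

```python
def stabilizing_offsets(background_steps):
    """Return one (dx, dy) correction per frame, aligning every frame on frame 0.

    background_steps[i] is the estimated (dx, dy) displacement of the
    background between frame i and frame i + 1.
    """
    offsets = [(0, 0)]  # frame 0 is the reference and is not moved
    total_x = total_y = 0
    for dx, dy in background_steps:
        # Accumulate the motion since frame 0...
        total_x += dx
        total_y += dy
        # ...and reverse it to bring the frame back over the reference.
        offsets.append((-total_x, -total_y))
    return offsets
```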

1,430 2011-02-02 09:47:36

Re: A suggestion on the origo (4 replies, posted in Ideas and feature requests)

I'm afraid I'm not sure what an "Origo" is. Can you elaborate ? The origin of coordinates ?

1,431 2011-02-01 21:43:18

Re: Using overlay with zoom? (9 replies, posted in General)

This has been implemented in the last version (0.8.12) by allowing whole image dragging even at normal size (no zoom). The change is also applied to the copy on the other screen.

It is only active when blending between the two videos is active for now…

Also, currently you can't zoom out to less than 100%… maybe it is limiting the usability of the feature…

Thoughts for improvements ?

1,432 2011-02-01 21:31:50

Topic: Version expérimentale - 0.8.12 (18 replies, posted in Français)

Version expérimentale : elle a besoin de vos retour d'expérience pour s'améliorer !

L'installeur est dispo ici : [s]Kinovea.Setup.0.8.12.exe[/s] - Voir 0.8.13

Changement principaux :

- Mise à jour des traductions pour le Hollandais et l'Italien, merci à pstrikwerda et giobia !

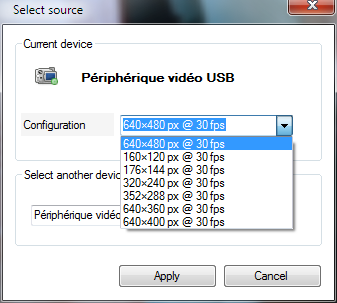

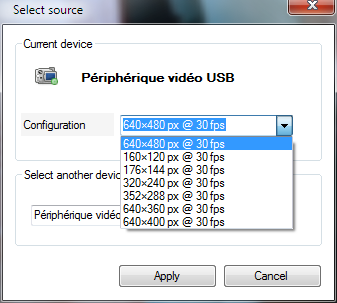

- Il est maintenant possible de configurer la taille image et la fréquence pour la capture (selon les capacités de la caméra).

- Les coordonnées des marqueurs en croix sont exportées dans les fichiers tableurs.

Correctifs : bugs 183, 216, 227, 228, 230.

Plus de détails dans le changelog (en).

1,433 2011-02-01 21:13:57

Re: Error when trying to save in dual screen mode with version 0.8.11 (14 replies, posted in Bug reports)

If you used to experience this bug, please retry with the latest version (0.8.12) to confirm whether it is corrected or not.

Thanks !

1,434 2011-02-01 20:54:10

Topic: Experimental version - 0.8.12 (2 replies, posted in General)

This is an experimental version : it needs your feedback to improve.

The installer is available here: [s]Kinovea.Setup.0.8.12.exe[/s] - See 0.8.13

Highlighted changes:

- The Dutch and Italian translations have been updated thanks to pstrikwerda and giobia.

- You can now configure the frame size / frame rate combo for capture (depending on camera capabilities).

- Coordinates for single points are now included in spreadsheet exports.

Fixed bugs : 183, 216, 227, 228, 230.

More details in the changelog.

1,435 2011-02-01 15:06:52

Re: Deinterlace interlaced videos to 50 frames (1 replies, posted in General)

Oh, I didn't realize there were options, thanks for the heads up.

I'll try to look at this when time permits. If it's possible to have a deinterlace mode that reconstructs full images from each field, then it will surely become the default.

edit:

The Yadif deinterlace algorithm is actually implemented in the new libavfilter library, which Kinovea doesn't include yet. The internal deinterlace will just reconstruct a frame from the first field.
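To show the difference between the two modes, here is a simplified sketch. It is neither Yadif nor the actual internal deinterlacer: it uses plain line doubling, represents a frame as a list of rows, and assumes the first field is made of the even rows. All function names are made up for the illustration.

```python
def split_fields(frame):
    """Split an interlaced frame (list of rows) into its two fields."""
    return frame[0::2], frame[1::2]


def line_double(field):
    """Rebuild a full height image from one field by repeating each row."""
    image = []
    for row in field:
        image.append(row)
        image.append(row)
    return image


def deinterlace_first_field(frame):
    """One output image per frame, built from the first field only."""
    first, _ = split_fields(frame)
    return line_double(first)


def deinterlace_both_fields(frame):
    """Two output images per frame, one per field."""
    first, second = split_fields(frame)
    return [line_double(first), line_double(second)]
```

With the second mode, a video with 25 interlaced frames per second would yield 50 images per second, which is what the thread title asks for.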

There are other interesting things in libavfilter (notably the rotation filter) so sooner or later it will be included.

1,436 2011-02-01 14:13:44

Re: Romanian translation for Kinovea 0.8.7 - it isn't too late, is it? (1 replies, posted in General)

Hi, thanks for your support.

I think it is best if you coordinate with Bogdan Paul Frățilă on this, as he is in charge of the Romanian translation.

Please contact me by mail at joan@kinovea.org so I can connect you to him.

Regarding the release cycle and translation, it goes like this :

1. A version is released (say 0.8.7)

2. Development phase (as new features are implemented new strings are constantly added, removed, changed, so it's better to wait before translating them).

During this phase, experimental versions are released for testing, feedback, etc. (0.8.8, 0.8.9, etc.)

3. Feature freeze (no more features will be added for the next official release)

4. Translation phase (dedicated to translating all the new and changed strings since last official release, updating user manual, etc.)

5. The new version is released.

We are currently in step 2, closing in on 3.

1,437 2011-02-01 11:45:13

Re: Speed Presets in Replay and Delayed Capture (1 replies, posted in General)

Hi,

For the speed slider you can jump to the nearest 25% spot by using CTRL+Up arrow or CTRL+Down arrow.
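In case it helps to picture the behavior, jumping to the next 25% spot can be sketched like this. The function name and the slider bounds (25% to 200%) are assumptions for the example, not values taken from the program.

```python
import math


def next_speed_step(current, direction, step=25, minimum=25, maximum=200):
    """Return the next multiple of `step` above (direction > 0) or below `current`.

    `minimum` and `maximum` are hypothetical slider bounds, in percent.
    """
    if direction > 0:
        target = (math.floor(current / step) + 1) * step
    else:
        target = (math.ceil(current / step) - 1) * step
    # Stay within the slider range.
    return max(minimum, min(maximum, target))
```

For example, from 60% this sketch goes to 75% with CTRL+Up and to 50% with CTRL+Down.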

I'll add the rest to the suggestion queue.

- A shortcut to change the live delay in capture screen. (could use the same CTRL+arrows to jump by 25% steps maybe ?)

- A shortcut to skip back in time by a given amount of time - (if currently playing keep playing, if not, stay paused).

Currently, the Home and End keys will jump to the beginning / end of the current selection. Ideally, page up / down could have been used for jumping back/forth 5s, but they are already used to change the active screen when two screens are open. We may solve this conflict by expecting the CTRL modifier for one of the functions… ?

Any feedback appreciated.

1,438 2011-01-28 18:09:40

Re: New feature : Observational References (19 replies, posted in General)

Yes, it will only consider files with the .svg extension for inclusion in the menu.

I see there is a check box in Inkscape's save dialog to "automatically add file extension", do you have it checked?

The menu will update in real time in the next version, by listening to changes in the "guides" folder.

(Also, semi-hidden feature discussed in page 1 of this thread, you can create subdirectories to group the files to your liking.)
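For illustration, the way the menu could be built from the folder can be sketched like this. It is not the actual implementation: the function name is invented, and ignoring the case of the extension is an assumption.

```python
from pathlib import Path


def build_guides_menu(guides_dir):
    """Map each subdirectory of the guides folder to the .svg files it contains.

    Files directly in the guides folder are grouped under ".".
    """
    root = Path(guides_dir)
    menu = {}
    for path in sorted(root.rglob("*")):
        # Only files with the .svg extension are considered.
        if path.is_file() and path.suffix.lower() == ".svg":
            group = path.parent.relative_to(root).as_posix()
            menu.setdefault(group, []).append(path.stem)
    return menu
```

A file saved from Inkscape without the extension would simply not show up in the result, which matches the behavior described above.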

What would be really awesome is if there was a central place where users could publish and share these files, and improve them by collaboration. It would also make it easier to diagnose issues related to them.

I find the manipulation of the objects suboptimal though. (handles in corners often get out of the picture, dragging works only if exactly on part of the object, etc.)

If anyone has ideas to improve the manipulation, don't hesitate !

1,439 2011-01-28 11:55:33

Re: Snap to horizontal/vertical (2 replies, posted in Ideas and feature requests)

Ah yes, nice idea. Thanks for the suggestion.

I'm thinking maybe the Shift key modifier ? (I think it's like that in Photoshop and maybe other programs. Additionally, Shift + free hand pencil will draw straight lines, which could be interesting too.)
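A possible way to do the snapping, as a sketch only (the function name is hypothetical and nothing is implemented yet): while Shift is held, keep only the larger of the two displacements from the start point.

```python
def snap_endpoint(start, end, shift_held):
    """Return the end point of a line, snapped to horizontal or vertical if Shift is held."""
    if not shift_held:
        return end
    (x0, y0), (x1, y1) = start, end
    if abs(x1 - x0) >= abs(y1 - y0):
        # Mostly horizontal drag: keep the start point's y.
        return (x1, y0)
    # Mostly vertical drag: keep the start point's x.
    return (x0, y1)
```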

1,440 2011-01-26 22:51:38

Re: New feature : Observational References (19 replies, posted in General)

Thanks!

I'm having the same behavior.

When saving from Inkscape, everything looks fine, no error message, but then, when going to the actual location in Program Files through Windows Explorer, the file is not there.

(Although if reopening Inkscape save dialog, the file is visible!)

I think this is the folder virtualization feature at work, as I suspected above. In Vista and 7, programs run with normal user privileges (even on an administrator account) so when Inkscape tries to write to the Program Files directory, it is transparently redirected to another, safe location.

The files are virtualized into %localappdata%/VirtualStore
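To find where a given file ended up, the redirected location can be computed roughly as below. This is a sketch: the function name, the install path and the user name are made up, and it assumes the original path is mirrored under VirtualStore without its drive letter.

```python
from pathlib import PureWindowsPath


def virtualized_path(original, local_app_data):
    """Return where a redirected write to `original` would be expected to land."""
    path = PureWindowsPath(original)
    # Drop the drive ("C:\") and keep the rest of the path.
    relative = path.relative_to(path.anchor)
    return PureWindowsPath(local_app_data) / "VirtualStore" / relative


# Hypothetical example paths.
redirected = virtualized_path(
    r"C:\Program Files\Kinovea\guides\test.svg",
    r"C:\Users\someone\AppData\Local",
)
```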