From your description, you have two videos of the same event, but they don't play for the same duration. Is that right?

If so, it would seem that Kinovea cannot parse the framerate of one of the videos correctly.

When playing two videos, the program uses the framerate of each video independently, so you shouldn't have to worry about it: a 10-second scene will last 10 seconds whatever each video's framerate.

In any case, I don't think you would be able to compensate for the framerate mismatch by tweaking the slow motion value (the required value will probably not be a whole number).

You can adjust the slow motion in 1% increments with the UP/DOWN arrow keys, but as you noticed, this change affects both videos. This is a recent change, but it makes sense: the framerate should be irrelevant with regard to slow motion, and we couldn't find a real-life scenario where one would need to compare a slowed-down video with a real-time one.
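To illustrate why the slow motion value is a poor fix for a misread framerate, here is a small sketch of the arithmetic. It assumes the mismatch comes from the player reading the wrong framerate from the file; the function names and the framerate values are hypothetical and not part of Kinovea. If a clip really runs at `true_fps` but is played as if it were `parsed_fps`, it runs at `parsed_fps / true_fps` of real speed, so the speed would have to be multiplied by `true_fps / parsed_fps` to cancel that out.

```python
def compensating_speed_percent(true_fps: float, parsed_fps: float) -> float:
    """Playback speed (in % of normal) that would undo a misread framerate.

    Hypothetical helper: a clip recorded at true_fps but played back as if it
    were parsed_fps runs at parsed_fps / true_fps of real speed, so the speed
    must be scaled by true_fps / parsed_fps to get back to real time.
    """
    return 100.0 * true_fps / parsed_fps


def reachable_with_1pct_steps(percent: float, tol: float = 1e-9) -> bool:
    """True if the percentage can be reached with whole 1% increments."""
    return abs(percent - round(percent)) < tol


# Hypothetical cases: a 29.97 fps file read as 25 fps, and a 30 fps file read as 25 fps.
for true_fps, parsed_fps in [(29.97, 25.0), (30.0, 25.0)]:
    pct = compensating_speed_percent(true_fps, parsed_fps)
    print(f"{true_fps} fps read as {parsed_fps} fps -> {pct:.2f}% "
          f"(reachable with 1% steps: {reachable_with_1pct_steps(pct)})")
```

In the first case the required speed is 119.88%, which the 1% increments cannot reach exactly; only in lucky cases like the second one (120%) does it land on a whole number.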

Could you identify which video is not playing for the right duration? It should behave the same way even when played alone in single-screen view.

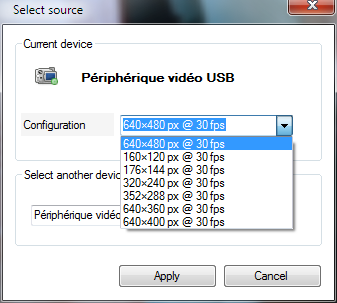

With information on its format, etc., we can try to figure out where the problem is.
