Hi,

Yes, good markers will go a long way toward improving tracking, especially against a complex background.

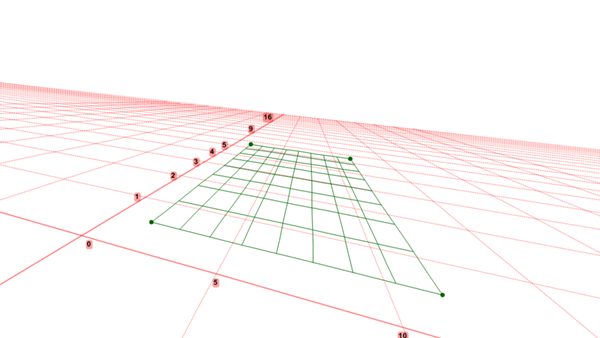

If you are not already using version 0.8.22, I suggest you try it, because it lets you change the size of the tracking windows (the object window and the search window).
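To see why these two sizes matter, here is a minimal sketch of window-based template matching in plain Python. It is not the tracker's actual code. The function names `ssd` and `track_step`, the sum-of-squared-differences score, and the `search_radius` parameter are assumptions for illustration. The template plays the role of the object window, and the block of positions scanned around the previous location plays the role of the search window.

```python
def ssd(frame, template, top, left):
    """Sum of squared differences between the template and the frame patch at (top, left)."""
    total = 0
    for dy, trow in enumerate(template):
        frow = frame[top + dy]
        for dx, tval in enumerate(trow):
            d = frow[left + dx] - tval
            total += d * d
    return total


def track_step(frame, template, prev_top, prev_left, search_radius):
    """Return the (top, left) position that best matches the template.

    Only positions within +/- search_radius of the previous position are tried
    (the hypothetical "search window"); the template itself is the "object window".
    Assumes the template fits inside the frame.
    """
    h, w = len(frame), len(frame[0])
    th, tw = len(template), len(template[0])
    best = None
    for top in range(max(0, prev_top - search_radius), min(h - th, prev_top + search_radius) + 1):
        for left in range(max(0, prev_left - search_radius), min(w - tw, prev_left + search_radius) + 1):
            score = ssd(frame, template, top, left)
            if best is None or score < best[0]:
                best = (score, top, left)
    return best[1], best[2]
```

In this sketch, a larger search window tolerates faster motion between frames but costs more comparisons and gives a cluttered background more chances to produce a false match. A larger object window puts more pixels into each comparison, which helps only if those extra pixels belong to the marker rather than the background.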

As for the marker design itself, if size permits, you can use two concentric black and white rings, a bit like an archery target but with only black and white stripes. The ring shape makes the markers invariant to rotation.
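If you want to prototype such a target before printing it, you can generate it programmatically. The sketch below uses hypothetical function names (`ring_marker`, `to_pgm`) and draws alternating white and black bands on a white background, with the outermost band black so the target stands out, then encodes the result as a binary PGM image. Because each pixel's color depends only on its distance from the center, rotating the marker leaves it unchanged, which is the property the ring shape is meant to provide.

```python
import math


def ring_marker(size, n_rings=2):
    """Return a size x size grid (list of rows) of 0 (black) / 255 (white) pixels.

    The disc is split into 2 * n_rings bands of equal width, alternating
    white and black from the center outward, so the outermost band is black.
    Pixels outside the disc are white background.
    """
    c = (size - 1) / 2                   # center (between pixels for even sizes)
    radius = size / 2
    n_bands = 2 * n_rings
    band_width = radius / n_bands
    marker = []
    for y in range(size):
        row = []
        for x in range(size):
            dx, dy = x - c, y - c
            r = math.sqrt(dx * dx + dy * dy)
            if r >= radius:
                row.append(255)          # outside the target: white background
                continue
            # guard against rounding right at the outer edge
            band = min(int(r // band_width), n_bands - 1)
            row.append(0 if band % 2 == 1 else 255)  # odd bands are black
        marker.append(row)
    return marker


def to_pgm(marker):
    """Encode the grid as a binary (P5) PGM image."""
    h, w = len(marker), len(marker[0])
    header = f"P5\n{w} {h}\n255\n".encode("ascii")
    return header + bytes(v for row in marker for v in row)
```

For example, `open("marker.pgm", "wb").write(to_pgm(ring_marker(256)))` writes a 256×256 target that most image viewers can open and print.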

If the video is already on YouTube, you can embed it like this, for example:

[video]https://www.youtube.com/watch?v=20wOlps_Nj0[/video]